TDD for infrastructure with Vagrant

Introduction

For many years (almost decades), developers have practiced test-driven development, writing tests to gain confidence that their code works.

However, with the rise of infrastructure as code, where DevOps engineers describe infrastructure provisioning in code, this practice is generally not observed. In many situations I have encountered infrastructure that

is not ephemeral

is not set up with automation tools

is not complete

is buggy from the start

fails on its first deployment

So wouldn’t we love to see infrastructure development follow the test-driven development paradigm by

Writing infrastructure tests first

Coding the infrastructure

Repeating that cycle until we are done

In this post and the ones that follow, I will look at tools for

Infrastructure testing

Creating virtual machines (and why this may not work well with continuous delivery/deployment)

Machine provisioning

Modeling (and testing) a pipeline

While looking at those tools, I will demonstrate test-driven development by setting up a cluster of three nodes, each with a Docker engine and the ntp service installed. Finally, I will try to scale this to n nodes and demonstrate a fully automated infrastructure build using a build engine.

Test-driven development

Test specifications

While numerous test frameworks support TDD for application code, there are only a few candidates for infrastructure testing. There may be more tools, but I have found the ones below most helpful for different tasks.

Chef InSpec (Ruby DSL)

Serverspec (Ruby DSL)

bats (bash shell script)

I consider the first two first-class tools for testing infrastructure, while I find the last one perfect for testing shell scripts. In other words, the first two are good for integration testing, while the last one allows decent unit testing.

For the upcoming experiments I will use Chef InSpec, which provides a Ruby-based DSL.

Target infrastructure

To test the provisioning scripts, we need target nodes to run the provisioning scripts on and then to run the test specifications against.

We can start off with virtual machines running on VirtualBox, Hyper-V, VMware, etc. This is the traditional approach, but we will soon find that provisioning virtual machines is quite slow and does not give a nice TDD experience. So in follow-up posts I will move on to containers (such as Docker containers) or lightning-fast KVM/Firecracker-based machines using Weaveworks’ Footloose and Ignite.

VirtualBox and Vagrant

I’m going to demonstrate a full TDD cycle: writing tests, writing the infrastructure code, and running the tests until everything works as specified. VirtualBox will be the provider for the virtual machines, and HashiCorp’s Vagrant will do the work of setting up the machines.

Write some tests first

The Chef InSpec DSL is pretty much self-explanatory.

ntp.rb

```ruby
# every node should have the ntp service installed, enabled at boot and running
describe service('ntp') do
  it { should be_installed }
  it { should be_enabled }
  it { should be_running }
end
```

To execute the spec, we need a target host that we connect to via SSH (using a private/public key pair). We will create this host and the SSH keys in just a moment.

```shell
inspec exec ntp.rb \
  --target ssh://vagrant@172.28.127.100:22 \
  --key-files ../vagrant/.vagrant/machines/vagrant-node-0/virtualbox/private_key
```

Build the execution environment (hosts)

Now Vagrant comes into play for creating and running the target host(s). Vagrant provides a Ruby-based DSL to configure VirtualBox and the hosts. To connect to the hosts, Vagrant also automatically creates an SSH private/public key pair for each node.

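```ruby
# Vagrantfile; the base box (config.vm.box) is omitted here
Vagrant.configure("2") do |config|
  config.vm.provider "virtualbox" do |vb|
    # memory in MB, overridable via the BOX_MEMORY environment variable
    vb.memory = ENV['BOX_MEMORY'] || "256"
    # linked clones share the base disk and are faster to create
    vb.linked_clone = true
  end

  # three nodes: vagrant-node-0..2 at 172.28.127.100..102
  (0..2).each do |i|
    config.vm.define "vagrant-node-#{i}" do |machine|
      machine.vm.hostname = "vagrant-node-#{i}"
      # assign a well-known IP address for the Ansible inventory
      machine.vm.network "private_network", ip: "172.28.127.#{100+i}"
    end
  end
end
```

To start the hosts, we run the command below and time it.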

```shell
time vagrant up
```

Firing up the hosts for the first time takes Vagrant about 1 minute 51 seconds on my decent MacBook Pro. This is way too slow, given that we periodically have to tear down and recreate the machines to test the provisioning scripts.
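We will recreate these machines often, and the cluster should later scale beyond three nodes. For both reasons, the node names and IP addresses are worth deriving in one place instead of repeating them in the Vagrantfile, the Ansible inventory and the InSpec targets. The following is one way to do that. It assumes the IP scheme from the Vagrantfile above. The file name nodes.rb, the NODE_COUNT variable and the helper names are hypothetical and not part of the original setup.

```ruby
# Hypothetical nodes.rb: derive node names and IPs from one node count so the
# Vagrantfile, the Ansible inventory and the InSpec targets stay in sync.
require "yaml"

IP_PREFIX = "172.28.127." # private network used in the Vagrantfile
IP_OFFSET = 100           # vagrant-node-0 gets 172.28.127.100

# Returns [{ name: "vagrant-node-0", ip: "172.28.127.100" }, ...]
def cluster_nodes(count = Integer(ENV.fetch("NODE_COUNT", "3")))
  (0...count).map do |i|
    { name: "vagrant-node-#{i}", ip: "#{IP_PREFIX}#{IP_OFFSET + i}" }
  end
end

# Renders an inventory with the same shape as inventory/vagrant/hosts.yml.
def inventory_yaml(nodes)
  hosts = nodes.to_h { |node| [node[:name], nil] }
  { "all" => { "children" => { "cluster_nodes" => { "hosts" => hosts } } } }.to_yaml
end

# Renders the host_vars file content for a single node.
def host_vars_yaml(node)
  {
    "ansible_host" => node[:ip],
    "ansible_ssh_private_key_file" =>
      "../vagrant/.vagrant/machines/#{node[:name]}/virtualbox/private_key"
  }.to_yaml
end

if $PROGRAM_NAME == __FILE__
  nodes = cluster_nodes
  puts inventory_yaml(nodes)
  nodes.each { |node| puts "# host_vars/#{node[:name]}.yml", host_vars_yaml(node) }
end
```

A Vagrantfile could then `require_relative "nodes"` and loop over `cluster_nodes` instead of `(0..2)`. Running `NODE_COUNT=5 ruby nodes.rb` prints a matching inventory and host_vars content for main/ansible/inventory/vagrant.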

But let’s get the next task done: writing some infrastructure code.

Infrastructure as code with Ansible

I have decided to use Ansible because it is simple to set up and use. Even more importantly, it runs agentless.

I’m not going to explain how Ansible works; that is beyond the scope of this post.

All of the setup lives in the main/ansible folder, which contains the Ansible playbooks and the inventory.

These are the playbooks for installing the required services.

main/ansible/ntp.yml

```yaml
- hosts: all
  become: true
  roles:
    - role: geerlingguy.ntp
      vars:
        ntp_manage_config: true
```

main/ansible/docker.yml

```yaml
- hosts: cluster_nodes
  become: true
  roles:
    - role: geerlingguy.docker
      vars:
        docker_install_compose: false
        docker_users:
          - "{{ inventory_hostname_short }}_ansible"
```

The Ansible inventory describes the set of hosts and how to connect to them.

main/ansible/inventory/vagrant/hosts.yml

```yaml
all:
  hosts:
  children:
    cluster_nodes:
      hosts:
        vagrant-node-0:
        vagrant-node-1:
        vagrant-node-2:
```

Host or host group specifics are as follows.

main/ansible/inventory/vagrant/group_vars/all.yml

```yaml
ansible_user: 'vagrant'
ansible_port: '22'
ansible_become: true
# '-F /dev/null' keeps any local ~/.ssh/config from interfering with the inventory
ansible_ssh_common_args: '-F /dev/null'
```

main/ansible/inventory/vagrant/host_vars/vagrant-node-0.yml

```yaml
ansible_host: '172.28.127.100'
ansible_ssh_private_key_file: '../vagrant/.vagrant/machines/vagrant-node-0/virtualbox/private_key'
```

The other two nodes need matching host_vars files with their own IP address and key path.

Provision the hosts

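Ansible target hosts require Python to be installed before we can run playbooks. We install it with Ansible’s raw module, which runs plain commands over SSH and does not need Python on the target.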

```shell
cd ansible
ansible --become -m raw \
  -a "ln -sf /bin/bash /bin/sh && apt-get update && apt-get install -y python3 gnupg2" \
  --inventory=inventory/vagrant/hosts.yml all
```

Now we run the playbooks for the inventory (all Vagrant hosts).

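If the geerlingguy roles are not installed yet, fetch them from Ansible Galaxy first.

```shell
ansible-galaxy install geerlingguy.ntp geerlingguy.docker
ansible-playbook -i inventory/vagrant/hosts.yml ntp.yml docker.yml
```

Test the hosts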

With the hosts provisioned, we can now run the test specifications.
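The inspec invocations in this post target only vagrant-node-0, although the specifications apply to every cluster node. The following minimal sketch builds one invocation per node. It assumes the Vagrant naming, the IP scheme and the per-machine key location used above, and that it is run from the inspec directory. NODES and inspec_command are hypothetical names. The script only prints the commands unless it is called with --run.

```ruby
require "shellwords"

# Hypothetical node list mirroring the Vagrantfile: [name, ip] pairs.
NODES = (0..2).map { |i| ["vagrant-node-#{i}", "172.28.127.#{100 + i}"] }

# Builds the argument vector for one `inspec exec` run against a single node,
# using the key file Vagrant generated for that machine.
def inspec_command(name, ip, specs: %w[ntp.rb docker.rb])
  key = "../vagrant/.vagrant/machines/#{name}/virtualbox/private_key"
  ["inspec", "exec", *specs, "--target", "ssh://vagrant@#{ip}:22", "--key-files", key]
end

if $PROGRAM_NAME == __FILE__
  dry_run = !ARGV.include?("--run")
  # map (not all?) so every node is tested even if an earlier one fails
  results = NODES.map do |name, ip|
    cmd = inspec_command(name, ip)
    puts Shellwords.join(cmd)
    dry_run ? true : system(*cmd)
  end
  exit(1) unless results.all?
end
```

With `--run`, any non-zero exit status from inspec counts as a failure. The script tries all nodes before exiting with status 1.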

cd inspec

inspec exec ntp.rb docker.rb \

--target ssh://vagrant@172.28.127.100:22 \

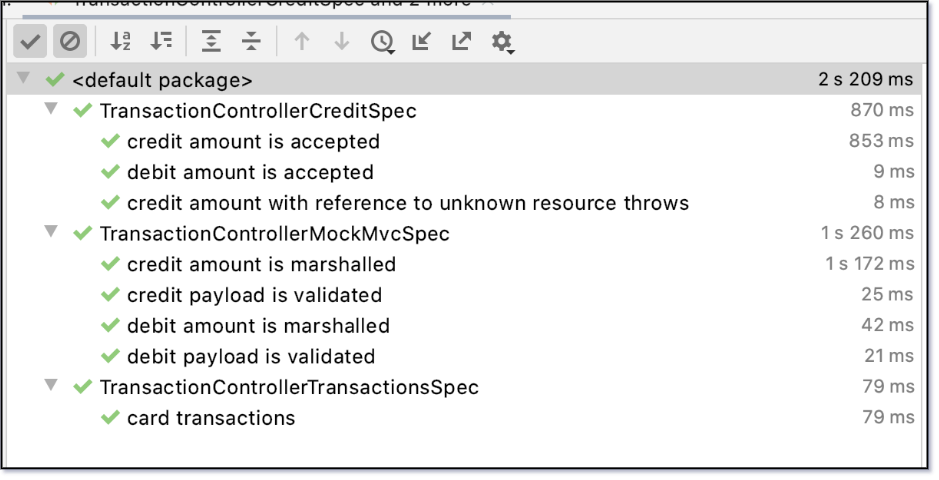

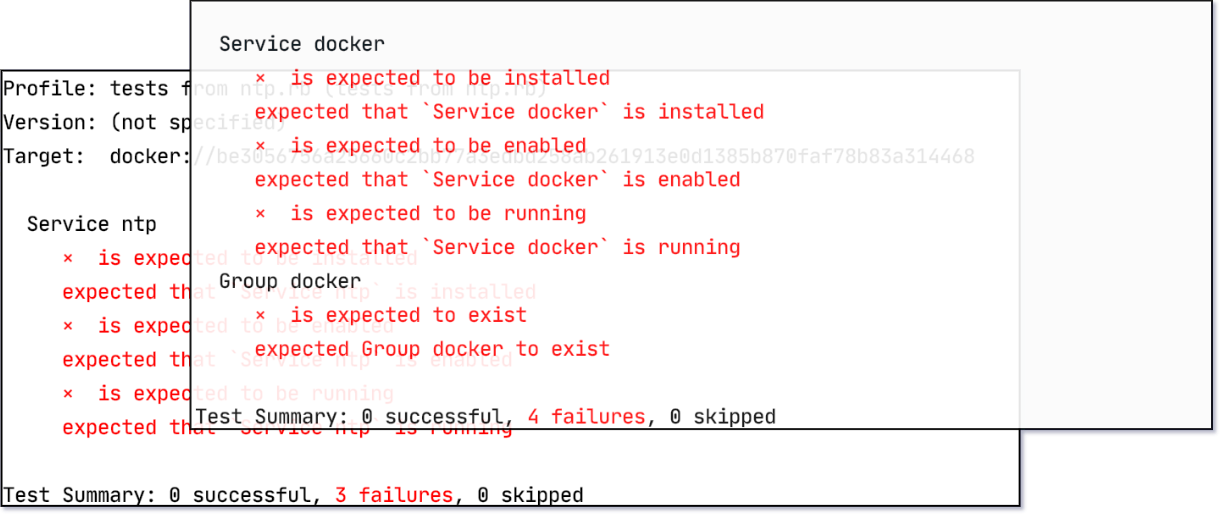

--key-files ../vagrant/.vagrant/machines/vagrant-node-0/virtualbox/private_keyWe are rewarded with a hopefully green test indicator for each test. It hopefully looks like below:

```
Profile: tests from ntp.rb (tests from ntp.rb)
Version: (not specified)
Target:  ssh://vagrant@172.28.127.100:22

  Service ntp
     ✔  is expected to be installed
     ✔  is expected to be enabled
     ✔  is expected to be running

Test Summary: 3 successful, 0 failures, 0 skipped
```

Wrap-up

With VirtualBox (or other virtualization software) and Vagrant for managing virtual hosts, and Chef InSpec for testing, I have successfully practiced test-driven development for infrastructure.

Clearly, because provisioning virtual machines is slow, we have to accept that a full TDD cycle takes longer than we would like, which is not a nice user experience. We also have to question whether this approach can be implemented in a continuous integration pipeline, since a build service would then have to create virtual machines to test the provisioning.
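Chaining the manual steps from this post in one script shows where the time goes in a full cycle. It is also a first step toward a job a build service could run. This is a minimal sketch that assumes the vagrant/, ansible/ and inspec/ directories sit side by side (as the relative key paths suggest) and that vagrant, ansible, ansible-playbook and inspec are on the PATH. The names tdd_cycle.rb, STEPS and run_cycle are hypothetical. The script is a dry run by default and executes the steps only with --run.

```ruby
# Hypothetical tdd_cycle.rb: one full TDD round trip (recreate hosts, bootstrap
# Python, provision, test) with wall-clock timing per step.
BOOTSTRAP = "ln -sf /bin/bash /bin/sh && apt-get update && apt-get install -y python3 gnupg2"
KEY = "../vagrant/.vagrant/machines/vagrant-node-0/virtualbox/private_key"

# [label, working directory, argument vector]
STEPS = [
  ["destroy hosts", "vagrant", %w[vagrant destroy -f]],
  ["start hosts", "vagrant", %w[vagrant up]],
  ["bootstrap python", "ansible",
   ["ansible", "--become", "-m", "raw", "-a", BOOTSTRAP,
    "--inventory=inventory/vagrant/hosts.yml", "all"]],
  ["provision", "ansible", %w[ansible-playbook -i inventory/vagrant/hosts.yml ntp.yml docker.yml]],
  ["test", "inspec",
   ["inspec", "exec", "ntp.rb", "docker.rb",
    "--target", "ssh://vagrant@172.28.127.100:22", "--key-files", KEY]]
].freeze

# Runs each step in its directory (or only records it when dry_run is true)
# and stops at the first failing step. Returns [[label, seconds, ok], ...].
def run_cycle(steps = STEPS, dry_run: true)
  results = []
  steps.each do |label, dir, cmd|
    started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
    ok = dry_run ? true : system(*cmd, chdir: dir)
    elapsed = Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
    results << [label, elapsed, ok]
    break unless ok
  end
  results
end

if $PROGRAM_NAME == __FILE__
  run_cycle(dry_run: !ARGV.include?("--run")).each do |label, seconds, ok|
    printf("%-18s %8.1fs %s\n", label, seconds, ok ? "ok" : "FAILED")
  end
end
```

Stopping at the first failing step keeps a red cycle short. The per-step timings show how much of each round trip is spent in `vagrant up`.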

In the next post in this series, I will look into running the hosts as containers.